Pure Storage today unveiled FlashBlade//EXA, a new all-flash storage array designed to meet the demanding needs of AI factories and multi-modal AI training. FlashBlade//EXA separates the metadata layer from the data path in the I/O stream, which Pure says enables the array to move data at rates exceeding 10 terabytes per second per namespace.

FlashBlade//EXA is an expansion of Pure Storage's existing offerings, which include FlashBlade//S, its high-performance array for file and object workloads, and FlashBlade//E, its large-scale array designed for storing unstructured data.

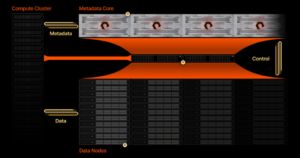

The new array splits the high-speed I/O into two parts. Metadata is routed through the metadata core component of FlashBlade//EXA, which is based on high-speed DirectFlash Module (DFM) nodes that house the company's scale-out distributed key-value store. The metadata core nodes run the Purity//FB operating system, which has been enhanced with support for Parallel NFS (pNFS) to communicate with the compute nodes.

Block data is routed separately over Remote Direct Memory Access (RDMA) to the data nodes. In this release, those are industry-standard Linux-based servers; the company plans to incorporate its DFM technology in a future release. This architecture lets FlashBlade//EXA reach the maximum available bandwidth between the data storage and the compute nodes.
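For readers unfamiliar with pNFS, the following toy Python sketch shows the general idea of separating metadata from data. A client makes one request to a metadata service to learn where a file's stripes live. It then reads those stripes directly from the data nodes, in parallel, so the metadata service never sits in the data path.

Everything in the sketch is hypothetical and in-memory: `MetadataServer`, `DataNode`, the round-robin striping, and the 4-byte stripe size. It is not Pure Storage's implementation, and it does not model RDMA or the actual pNFS wire protocol.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass

STRIPE_SIZE = 4  # hypothetical stripe size in bytes; tiny so the example is easy to follow


@dataclass(frozen=True)
class Extent:
    """One piece of a file layout: which data node holds which byte range."""
    node: str
    offset: int
    length: int


class MetadataServer:
    """Hypothetical metadata service: it knows where data lives but never moves it."""

    def __init__(self, node_names):
        self.node_names = list(node_names)
        self.layouts = {}  # file name -> list[Extent]
        self.lookups = 0   # counts layout requests from readers

    def create(self, name, size):
        # Stripe the file round-robin across the data nodes.
        extents = []
        for i, off in enumerate(range(0, size, STRIPE_SIZE)):
            node = self.node_names[i % len(self.node_names)]
            extents.append(Extent(node, off, min(STRIPE_SIZE, size - off)))
        self.layouts[name] = extents
        return extents

    def get_layout(self, name):
        self.lookups += 1
        return self.layouts[name]


class DataNode:
    """Hypothetical data server: holds raw bytes keyed by (file, offset)."""

    def __init__(self):
        self.blocks = {}

    def write(self, name, offset, data):
        self.blocks[(name, offset)] = data

    def read(self, name, offset, length):
        return self.blocks[(name, offset)][:length]


def write_file(mds, nodes, name, data):
    # Ask the metadata service for a layout, then push each stripe to its node.
    for ext in mds.create(name, len(data)):
        nodes[ext.node].write(name, ext.offset, data[ext.offset:ext.offset + ext.length])


def read_file(mds, nodes, name):
    # One metadata round trip to fetch the layout...
    layout = mds.get_layout(name)
    # ...then every stripe is fetched directly from its data node, in parallel.
    with ThreadPoolExecutor(max_workers=len(nodes)) as pool:
        parts = pool.map(lambda e: nodes[e.node].read(name, e.offset, e.length), layout)
        return b"".join(parts)  # map() preserves layout order


nodes = {f"data{i}": DataNode() for i in range(3)}
mds = MetadataServer(nodes)
write_file(mds, nodes, "ckpt.bin", b"model-weights-shard")
print(read_file(mds, nodes, "ckpt.bin"))  # b'model-weights-shard'
print(mds.lookups)                        # 1
```

The point of the sketch is the counter: however many data nodes serve the stripes, a read costs a single metadata lookup. The metadata tier and the data tier can therefore be sized independently. In NFSv4.1 itself, clients obtain layouts with the LAYOUTGET operation (RFC 8881, which obsoletes RFC 5661).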

"This segregation provides non-blocking data access that increases exponentially in high-performance computing scenarios where the metadata requests can equal, if not outnumber, data I/O operations," writes Alex Castro, a Pure Storage vice president, in a blog post.

FlashBlade//EXA separates the metadata stream from the block data stream, removing an I/O bottleneck, Pure Storage says.

When Sun Microsystems created NFS back in 1984, the primary focus was functionality, not performance, Castro says. Since then, legacy NAS devices, which require additional I/O controllers to be added with every new data node, have created a performance bottleneck. Splitting the I/O is the key to breaking that bottleneck, he says.

"Many storage vendors targeting the high-performance nature of large AI workloads only solve for half of the parallelism problem: providing the widest networking bandwidth possible for clients to get to data targets," Castro writes. "They don't address how metadata and data are serviced at massive throughput, which is where the bottlenecks at large scale emerge."

Some storage vendors have turned to specialized file systems, such as Lustre, to deliver the parallelism needed for large-scale projects, Castro writes. However, these environments were prone to metadata latency and required PhD-level skills to manage. Other vendors, meanwhile, have inserted a compute aggregation layer between the compute clients and the data source.

"This model suffers from expansion rigidity and more management complexity challenges than pNFS when scaling for massive performance, because it involves adding more moving parts with compute aggregation nodes," Castro says. "This rigidity forces data and metadata to scale in lockstep, creating inefficiencies for multimodal and dynamic workloads."

Pure says it developed FlashBlade//EXA to meet the growing needs of "AI factories," and in particular the need to keep thousands of high-end GPUs fed with data.

In terms of scale, AI factories sit in the middle. At the low end are enterprise AI workloads, such as inference and RAG, which work on 50TB to 100PB of data. AI factories will need access to up to 10,000 GPUs working on data sets from 100PB to several exabytes. At the high end, hyperscalers can have upwards of 100EB of data and more than 10,000 GPUs. At every level, idle GPUs are a drag on productivity.

"Data is the fuel for enterprise AI factories, directly impacting performance and reliability of AI applications," Rob Davis, Nvidia's vice president of storage networking technology, said in a press release. "With Nvidia networking, the FlashBlade//EXA platform enables organizations to leverage the full potential of AI technologies while maintaining data security, scalability, and performance for model training, fine tuning, and the latest agentic AI and reasoning inference requirements."

Pure Storage says it expects to begin shipping FlashBlade//EXA this summer.
